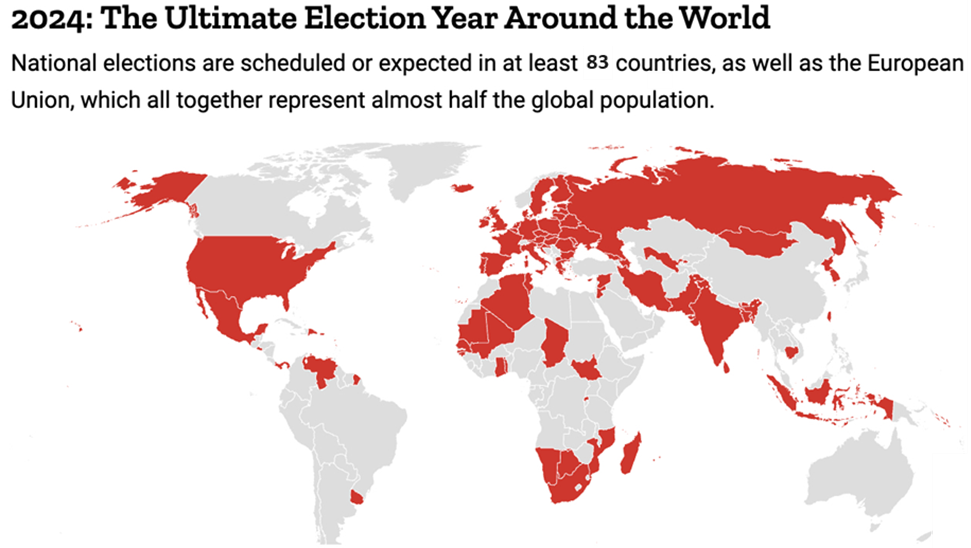

WITH ELECTIONS IN AT LEAST 83 COUNTRIES, WILL 2024 BE THE YEAR OF AI FREAK-OUT?

Relevance:

- GS 2 – Important aspects of governance, transparency and accountability

- GS 3 – Science and Technology: developments and their applications

Why in the News?

- Time magazine dubbed 2024 the “ultimate election year,” marking the largest global democratic exercise, with nearly half the world’s population participating.

- Concerns arise not only from traditional election issues but also from emerging digital threats, exacerbated by the AI frenzy of 2023.

- Run-of-the-mill election demons, such as voter fraud, campaign finance issues, and voter suppression, persist as ongoing concerns in 2024.

Concerns of AI Advancements

- The surge in AI advancements during 2023 has instigated concerns that AI will amplify election-related misconduct in 2024.

- There is apprehension that AI technologies will exacerbate the proliferation of deepfakes, disinformation campaigns, robocalls, and other nefarious methods of digital voter manipulation.

Regulatory Responses to AI Disinformation:

Governments worldwide have swiftly responded to the challenges posed by AI-generated disinformation.

- European Regulatory Approach:

- European governments, known for their emphasis on regulation, have been proactive in formulating rules to address AI-related disinformation.

- American Regulatory Stance:

- In contrast, the United States, characterized by a more hands-off regulatory approach, has also initiated measures to counter AI-induced disinformation.

- India’s Response:

- India’s Minister of State for Electronics and IT, Rajeev Chandrasekhar, has claimed an early awakening to the dangers of AI and has been instrumental in proposing regulations to combat AI-generated misinformation.

- Concerns about Rushed Regulations: There is a concern that in the rush to combat AI’s negative impacts, especially during election seasons, hastily crafted regulations may inadvertently exacerbate broader AI-related challenges.

THE CONCERNS WITH AI IN ELECTION YEAR

Impact of Disinformation Surge:

- Delayed Response to Manipulated Content:

- A manipulated video of Tarique Rahman, which suggested he held a controversial stance, illustrates the threat of disinformation during elections.

- Meta’s slow response in removing the fake video underscores the challenges that staff reductions pose for content moderation.

- Meta’s content moderation staff reductions, driven by layoffs in 2023, have strained their ability to address disinformation effectively.

- Limited resources force platforms to prioritize responses based on market significance, potentially leaving less influential regions vulnerable.

- Potential for Increased Disinformation:

- Pressure from powerful governments like the US, EU, and India may result in a disproportionate focus on certain regions during elections.

- With at least 83 elections scheduled this year globally, the strain on content moderation resources may lead to an overall surge in disinformation, exacerbating the problem.

- Ironically, pressure from a few influential governments to combat disinformation could lead to an overall increase in disinformation worldwide.

Impact of Reinforced AI Industry Concentration:

- Concentration of Investments:

- The dominance of a few companies like OpenAI, Anthropic, and Inflection in generative AI investment highlights industry concentration.

- The majority of investment in these companies originates from tech giants like Microsoft, Google, and Amazon, further consolidating their influence.

- Regulatory Implications:

- Regulations such as EU mandates and White House Executive Orders introduce measures like AI content watermarking and red-teaming exercises to enhance safety and security.

- However, these requirements pose challenges for smaller companies, particularly in terms of verifying sourced content and managing the costs associated with compliance.

- Barriers to Entry:

- Stringent regulations may create barriers to entry for smaller startups, limiting competition and innovation in the AI industry.

- Concentration of power within a few companies could result in ethical lapses, unchecked risks, and biases, ultimately influencing consequential decision-making processes.

Challenges with Ethical Guidelines:

- Diverse Ethical Perspectives:

- In a polarized society, determining whose ethics and values should shape AI frameworks poses a significant challenge.

- Disagreements over fundamental ethical questions, such as the prioritization of free speech versus protective measures, further complicate the development of universal guidelines.

- Controversy over Risk Prioritization:

- Prioritizing regulation based on perceived levels of risk, as proposed by EU regulations, sparks debate.

- Some view AI’s risks as existential, while others argue that focusing on these risks may divert attention from more immediate threats.

- Critics, including members of the Prime Minister’s Economic Advisory Council, caution against applying rigid risk frameworks to AI, citing its complex and unpredictable nature. They argue that such approaches may be inadequate and potentially risky.

Challenges with AI Transparency:

- Need for Audits:

- Transparency in AI necessitates audits of AI systems to ensure accountability and identify biases.

- However, the absence of mandatory laws governing audits raises concerns about the effectiveness of transparency measures.

- Limitations of Existing Legislation:

- Legislation such as the New York law mandating bias audits of automated employment decision tools has been criticized for lacking enforcement mechanisms.

- Studies, including one from Cornell, find that such laws do not adequately address bias.

- Voluntary Transparency Mechanisms:

- Some companies, including IBM and OpenAI, have voluntarily implemented transparency mechanisms for their AI systems.

- However, reliance on self-regulation raises concerns about conflicts of interest and the reliability of reports produced by the companies themselves.

Addressing Challenges with AI in Democracy:

- Prioritize Existing Democratic Challenges:

- Addressing longstanding issues such as political corruption, voter suppression, and electoral violence should remain a priority, recognizing that AI-related concerns may not be the most pressing in some contexts.

- Balanced Approach to AI Electoral Risks:

- While acknowledging the importance of addressing AI-related electoral risks, regulators should avoid hastily implemented measures that may have unintended consequences.

- Rushed regulations in response to AI concerns could potentially exacerbate existing democratic challenges rather than mitigating them.

- Anticipate Future Risks:

- Regulators should adopt a forward-thinking approach by formulating rules that anticipate and address evolving AI risks beyond the immediate electoral cycle.

- Proactive regulation can help mitigate future threats and ensure the integrity of democratic processes in the long term.

Source: https://indianexpress.com/article/opinion/columns/elections-83-countries-2024-ai-freak-out-9167479/

Mains question

Discuss the challenges and considerations in regulating AI’s role in democratic processes, highlighting the need for balanced measures and foresight to address evolving risks effectively. (250 words)