“NAVIGATING THE PERILS OF AI-DRIVEN DECEPTION: SAFEGUARDING DEMOCRACY IN THE DIGITAL AGE”

Syllabus:

- GS3: Science and Technology - developments and their applications and effects in everyday life.

- GS4: Strengthening of ethical and moral values in governance.

Source: IE

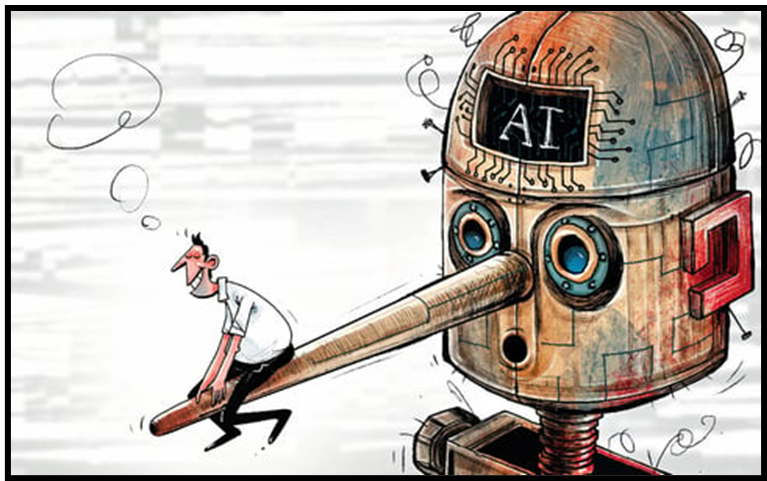

In 2024, with elections around the world involving over four billion eligible voters, democracy faces a significant challenge: the vulnerability of democratic systems in the era of artificial intelligence (AI).

The Rise of Deception in Democracies

- Acceleration of Falsehood: Social media has amplified the spread of falsehoods, and AI has the potential to exacerbate this phenomenon by automating the production and dissemination of deceptive content.

- Emergence of Deepfake Technology: The rise of deepfake technology poses a significant threat to the integrity of democratic processes, allowing malicious actors to create highly realistic fake videos and audio recordings that can be used to spread misinformation and manipulate public opinion.

- Exploitation of Algorithmic Biases: AI algorithms often exhibit biases that can be exploited to amplify divisive or misleading content, leading to the proliferation of misinformation and disinformation across online platforms.

- Manipulation of Social Media Algorithms: Malicious actors exploit the algorithms of social media platforms to amplify deceptive content, manipulate trending topics, and target vulnerable populations.

- Coordinated Disinformation Campaigns: Organized groups and foreign actors engage in coordinated disinformation campaigns to sow confusion, undermine trust in democratic institutions, and manipulate election outcomes.

Challenges Amplified by Decentralization

- Fragmented Platforms: Migration to decentralized platforms like Mastodon creates new frontiers for manipulation, complicating content moderation efforts and making it easier for malicious actors to evade detection and spread deceptive content.

- Erosion of Trust: The decentralization of online platforms has led to a proliferation of echo chambers and filter bubbles, where individuals are exposed primarily to information that aligns with their existing beliefs, further eroding trust in traditional sources of information.

- Lack of Accountability: Decentralized platforms often lack clear lines of accountability, making it difficult to hold platform owners responsible for the spread of misinformation and disinformation on their platforms, exacerbating the challenge of combating deceptive content.

- Limited Oversight: Decentralized platforms operate with limited oversight and regulation, allowing harmful content to flourish unchecked and undermining efforts to combat the spread of misinformation and disinformation.

- Inadequate Content Moderation Tools: Decentralized platforms often lack the robust content moderation tools and resources needed to effectively identify and remove deceptive content, leaving users vulnerable to manipulation and exploitation.

Lessons from Taiwan and the EU

- Preemptive Education: Taiwan’s ‘pre-bunking’ strategy, involving public education on deepfakes and other forms of AI-driven deception, demonstrates the efficacy of proactive measures in combating the spread of misinformation and disinformation.

- Regulatory Frameworks: The EU’s Digital Services Act and AI Act provide a blueprint for regulating AI-driven technologies and combating the spread of deceptive content online, emphasizing the importance of transparency, accountability, and user protection.

- International Collaboration: Addressing the challenges posed by AI-driven deception requires international collaboration and coordination, as malicious actors operate across borders and exploit regulatory gaps to evade detection and prosecution.

- Investment in Research and Development: Continued investment in research and development is needed to stay ahead of emerging threats posed by AI-driven deception and develop innovative solutions to protect democratic processes and institutions.

Global best practices by countries to ensure Ethical AI in Governance

The Imperative of Global Cooperation

- Universal Principles: There is a growing need for universally agreed-upon principles to guide country-specific legislation and regulatory efforts aimed at combating AI-driven deception and safeguarding the integrity of democratic processes worldwide.

- Capacity Building: Developing countries often lack the resources and expertise to effectively combat AI-driven deception, highlighting the need for capacity-building initiatives and technical assistance to strengthen their cybersecurity capabilities and resilience.

- Multistakeholder Engagement: Combating AI-driven deception requires the collective efforts of governments, tech companies, civil society organizations, and independent fact-checkers, underscoring the importance of multistakeholder engagement and collaboration in developing effective strategies and solutions.

- Information Sharing: Enhanced information sharing and collaboration between governments and tech companies can help identify and mitigate emerging threats posed by AI-driven deception in real time.

- Ethical Guidelines: The development and adoption of ethical guidelines for AI research and development are essential to ensure that AI-driven technologies are used responsibly and ethically to protect democratic processes and uphold fundamental rights and freedoms.

As democracies navigate the treacherous waters of the digital age, the need for comprehensive strategies anchored in transparency, education, and global cooperation has never been more pressing. By addressing the vulnerabilities in traditional electoral systems, understanding the challenges posed by AI-driven deception, and learning from successful strategies implemented in Taiwan and the EU, policymakers and stakeholders can work together to safeguard the integrity of democratic processes and keep elections free, fair, and secure in the face of evolving threats.

Keeping AI’s Future Open: Leveraging the Power of Open Source Ecosystems

Source: The Hindu

Mains Practice Question:

Question 1: General Studies Paper 2 (GS2)

“Discuss the implications of the democratisation of deception in the context of electoral processes. How can governments and international bodies collaborate to mitigate the risks posed by AI-driven misinformation and disinformation campaigns? Provide examples and suggest policy measures to ensure the integrity of democratic processes in the digital age.”

Question 2: General Studies Paper 4 (GS4) – Case Study

Case Study:

“In a fictional country, ‘Verdantia,’ upcoming elections are marred by the rampant spread of misinformation and disinformation campaigns orchestrated through AI-driven technologies. Social media platforms are flooded with fake news, deepfake videos, and coordinated bot networks, leading to heightened public distrust in the electoral process. As an ethics advisor to the government of Verdantia, how would you advise policymakers to address this crisis and restore public confidence in the democratic process? Outline a comprehensive strategy encompassing regulatory frameworks, public awareness campaigns, and international cooperation to combat the spread of AI-driven deception and uphold the principles of democracy.”

Associated Articles:

https://universalinstitutions.com/can-ai-be-ethical-and-moral/