Enhancing large language models with quantum computing

Syllabus:

GS 3:

- Achievements of India in Science and Technology

- Advancements in the fields of Robotics, Computers and IT.

Why in the News?

Quantum computing’s potential to revolutionize AI, including large language models (LLMs) and natural language processing (NLP), is generating significant interest. It promises improved efficiency, reduced energy consumption, and more accurate language understanding.

Overview and context:

- Transformation: Recent advancements in AI, particularly in natural language processing (NLP), have brought large language models (LLMs) to the forefront of technology.

- Current Models: Companies like OpenAI, Google, and Microsoft have developed LLMs that excel in generating human-like text, enhancing user-computer interactions.

- Energy Consumption: LLMs consume significant energy; training GPT-3 alone is estimated to have used 1,287 MWh of electricity, highlighting the need for more sustainable approaches.

- Carbon Footprint: Training a large LLM emits a substantial amount of carbon dioxide (published estimates for GPT-3 run to several hundred tonnes of CO2-equivalent), stressing the importance of reducing AI's environmental impact.

- Accuracy Issues: LLMs often produce text that is contextually coherent but factually incorrect, known as “hallucinations,” which limits their reliability and usefulness.

Understanding Key Terms:

Quantum Computing:

- Principles: Applies the principles of quantum theory, which govern the behaviour of matter at atomic and subatomic levels.

- Particles: Uses subatomic particles such as electrons or photons.

- Memory Units: Employs quantum bits (qubits) instead of binary bits.

- States: Qubits can exist in a superposition of 0 and 1, i.e. in multiple states simultaneously, until they are measured.

- Contrast: Classical computers, by comparison, use binary bits, each of which is either 0 or 1 at any given moment.

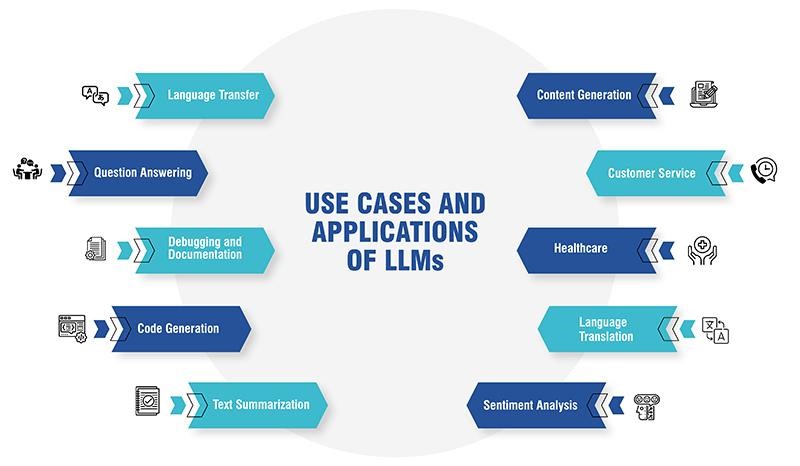
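The difference between a bit and a qubit can be made concrete with a small classical simulation. The sketch below assumes the standard textbook description of a single qubit as two complex amplitudes, alpha and beta, where measuring it gives 0 with probability |alpha|^2 and 1 with probability |beta|^2. The function names are hypothetical and chosen for illustration only; this runs on an ordinary computer and is not a quantum program.

```python
import math

def measurement_probabilities(alpha, beta):
    """Probabilities of reading 0 or 1 from the qubit state alpha|0> + beta|1>."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    # A valid qubit state must have probabilities that add up to 1
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1

def amplitudes_needed(n_qubits):
    """A general n-qubit state is described by 2**n complex amplitudes."""
    return 2 ** n_qubits

# A classical bit is 0 or 1; this qubit is an equal superposition of both
plus = (1 / math.sqrt(2), 1 / math.sqrt(2))
print(measurement_probabilities(*plus))   # each outcome is (approximately) 0.5
print(measurement_probabilities(1, 0))    # (1, 0): behaves like a classical 0

# Amplitudes may be complex numbers; only their magnitudes set the probabilities
print(measurement_probabilities(1 / math.sqrt(2), 1j / math.sqrt(2)))

# The description of the state grows exponentially with the number of qubits
for n in (1, 10, 50):
    print(n, "qubits ->", amplitudes_needed(n), "amplitudes")
```

The exponential growth in the last loop is the intuition usually given for why a modest number of qubits may represent information compactly, which is the basis of the "fewer parameters" claims made for quantum models.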

LLMs (Large Language Models):

Advanced AI models designed to understand and generate human-like text based on extensive training data, capable of performing tasks like translation and text generation.

NLP (Natural Language Processing):

A field of AI focused on enabling computers to understand, interpret, and generate human language, facilitating interactions between humans and machines.

QNLP (Quantum Natural Language Processing):

An emerging area combining quantum computing and NLP to enhance language model efficiency and accuracy by leveraging quantum phenomena for processing and understanding language.

Challenges with Current LLMs

- High Energy Needs: LLMs require extensive computational power due to their large number of parameters, resulting in high energy consumption and environmental concerns.

- Training Costs: For example, the electricity used to train GPT-3 is comparable to what an average American household consumes over 120 years.

- Syntactic Understanding: While LLMs are strong at capturing meaning (semantics), they are weaker at capturing sentence structure (syntax), which affects their ability to generate contextually precise text.

- Hallucinations: The models sometimes generate plausible but incorrect or nonsensical information, reflecting limitations in their training and understanding.

- Control Limits: Users have limited control over pre-trained models, leading to challenges in fine-tuning and managing outputs effectively.
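The household comparison for GPT-3's training can be checked with simple arithmetic. The sketch below uses the 1,287 MWh training estimate quoted earlier and assumes that an average US household uses roughly 10,700 kWh of electricity a year; that household figure is an approximation supplied for illustration, not a number from the source.

```python
GPT3_TRAINING_MWH = 1_287        # training energy estimate quoted in the text
HOUSEHOLD_KWH_PER_YEAR = 10_700  # assumption: approximate annual use of an average US household

def household_years(training_mwh, household_kwh_per_year):
    """How many years one household would take to use the same electricity."""
    training_kwh = training_mwh * 1_000  # 1 MWh = 1,000 kWh
    return training_kwh / household_kwh_per_year

years = household_years(GPT3_TRAINING_MWH, HOUSEHOLD_KWH_PER_YEAR)
print(f"about {years:.0f} household-years")  # about 120 household-years
```

Changing the assumed household figure shifts the result somewhat, but any value near 10,000 to 11,000 kWh per year keeps the answer in the region of 120 years.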

Quantum Natural Language Processing (QNLP) Advantages

- Lower Energy: QNLP aims to use quantum computing's unique properties to reduce energy costs compared to conventional LLMs, enhancing sustainability.

- Efficiency: Quantum models can achieve similar outcomes with fewer parameters, leading to greater computational efficiency without sacrificing performance.

- Holistic Processing: QNLP integrates grammar and meaning by using quantum phenomena, offering a more comprehensive understanding of language.

- Mitigating Hallucinations: Quantum approaches could improve contextual understanding, reducing the likelihood of generating erroneous or nonsensical outputs.

- Insights: QNLP aims to uncover cognitive processes behind language comprehension and generation, potentially advancing our understanding of human linguistic capabilities.
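The "Holistic Processing" point refers to models in which grammar decides how word meanings are combined. A minimal classical sketch of this idea, in the style of the DisCoCat (categorical compositional distributional) model on which much QNLP research builds, is given here. The word vectors and the verb matrix are hypothetical toy values, and the calculation runs on an ordinary computer; in QNLP, such word representations would instead be encoded as parameterised quantum circuits.

```python
# Hypothetical toy meanings for nouns in a 2-dimensional "noun space"
alice = [1.0, 0.0]
bob = [0.0, 1.0]

# A transitive verb is a tensor linking a subject and an object.
# With a 1-dimensional sentence space it reduces to a 2x2 matrix (toy values).
loves = [[0.1, 0.9],
         [0.8, 0.2]]

def sentence_meaning(subject, verb, obj):
    """Combine word meanings as the grammar 'noun verb noun' dictates:
    contract the subject with the verb's first index and the object with its second."""
    return sum(subject[i] * verb[i][j] * obj[j]
               for i in range(len(subject))
               for j in range(len(obj)))

print(sentence_meaning(alice, loves, bob))  # 0.9
print(sentence_meaning(bob, loves, alice))  # 0.8
```

Because the grammatical role of each word fixes where it enters the calculation, swapping subject and object changes the result: the sentence's meaning depends on structure as well as vocabulary, which is the sense in which such models integrate grammar and meaning.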

Quantum Generative Models for Time-Series Data

- Generative Models: Quantum generative models (QGen) can learn from and generate time-series data, potentially offering advantages over classical computing methods.

- Stationary vs. Nonstationary: QGen models handle both stationary data (e.g., commodity prices) and nonstationary data (e.g., stock prices) more effectively.

- Research Findings: A study from Japan demonstrated that QGen models can work with time-series data and require fewer parameters than classical methods.

- Applications: QGen models are suitable for forecasting and anomaly detection, providing practical solutions for complex financial and environmental problems.

- Resource Efficiency: By requiring fewer computational resources, QGen models offer a more efficient approach to solving problems traditionally addressed by classical computers.
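The stationary/nonstationary distinction above can be illustrated without any quantum hardware. A stationary series keeps roughly the same statistical behaviour (for example, the same mean) over time, while a nonstationary one drifts. The sketch below uses synthetic data, not real commodity or stock prices, and a deliberately crude heuristic (comparing the means of the two halves of a series with an arbitrary threshold); the function names are hypothetical, and real analyses use formal tests such as the augmented Dickey-Fuller test. It also shows differencing, a standard way of removing a trend.

```python
def half_means(series):
    """Mean of the first half and of the second half of a series."""
    mid = len(series) // 2
    first, second = series[:mid], series[mid:]
    return sum(first) / len(first), sum(second) / len(second)

def looks_stationary(series, tol=1.0):
    """Crude heuristic: a stationary series keeps a roughly constant mean.
    Not a formal statistical test; tol is an arbitrary threshold."""
    a, b = half_means(series)
    return abs(a - b) < tol

def difference(series):
    """First differences x[t+1] - x[t], a standard way to remove a trend."""
    return [b - a for a, b in zip(series, series[1:])]

# Hypothetical synthetic data (not real prices)
wiggle = [1 if t % 2 == 0 else -1 for t in range(20)]
stationary = [10 + w for w in wiggle]                        # fluctuates around 10
trending = [10 + 0.5 * t + w for t, w in enumerate(wiggle)]  # mean drifts upward

print(looks_stationary(stationary))            # True
print(looks_stationary(trending))              # False
print(looks_stationary(difference(trending)))  # True
```

Whatever the model, classical or quantum, a generative model for time series has to capture this kind of drift when the data are nonstationary, which is why handling both kinds of series is highlighted as a capability.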

Future Directions and Impact

- Sustainable AI: Quantum computing promises to revolutionize AI by making systems more sustainable and efficient, addressing current limitations in LLMs.

- Enhanced Models: Adopting QNLP and QGen technologies can lead to more sophisticated, accurate, and energy-efficient AI models.

- Broader Applications: Innovations in quantum computing could expand AI applications, improving performance in various fields including NLP and time-series forecasting.

- Research and Development: Continued research into quantum computing will be crucial for optimizing these technologies and integrating them into practical applications.

- Long-Term Benefits: Embracing quantum advancements will pave the way for AI systems that are both environmentally friendly and capable of handling complex tasks more effectively.

Conclusion

Quantum computing could transform AI by enhancing LLMs and NLP capabilities, making them more efficient and accurate. This progress is crucial for advancing technology while addressing current limitations in AI systems.

Source: The Hindu

Mains Practice Question

Discuss how quantum computing could address the limitations of current large language models (LLMs) and natural language processing (NLP) systems. What potential benefits and challenges might arise from integrating quantum computing into these technologies?

Associated Article:

https://universalinstitutions.com/the-challenges-of-quantum/