DUMB DOWN AI CONTENT

Relevance:

GS 2 – Effect of policies and politics of developed and developing countries on India’s interests; Important International institutions, agencies and fora-their structure, mandate.

GS 3 – Science and Technology- developments and their applications and effects in everyday life.

Why in the News?

- Google’s generative AI platform Gemini faced criticism for producing biased responses in areas such as history, politics, gender, and race.

- The Indian government expressed concern over a response from Gemini suggesting that the Prime Minister is a fascist, considering it particularly egregious.

- The government swiftly warned Google about unacceptable algorithmic bias.

Responses to Different Political Figures

- When asked similar questions about Ukrainian President Volodymyr Zelenskyy and Donald Trump, Gemini gave diplomatic responses.

- On Zelenskyy, the response stressed that the question was complex and contested, and called for nuanced consideration.

- On Trump, the response cited the complexity of elections and directed users to Google Search for the latest and most accurate information.

Government Action Against Algorithmic Bias

- The government promptly warned Google that algorithmic bias would not be tolerated, citing concerns over biased responses from Gemini.

- Following the warning, the government issued an advisory requiring AI models to obtain government approval before public deployment, aiming to address potential biases.

- This is not the first time Google has faced government scrutiny.

- In November 2023, controversy arose when Google’s AI platform refused to summarize an article from a right-wing online media outlet, citing concerns about spreading false information and bias.

Controversy Surrounding Gemini

- Gemini faced global controversy for inaccurately representing white Europeans and Americans in specific historical contexts.

- For example, when asked to produce images of a German soldier from 1943, Gemini generated images of ethnically diverse, non-white individuals, which was historically inaccurate.

- In response to the backlash, Google issued an apology and committed to rectifying the model’s shortcomings.

Debates Surrounding AI Models

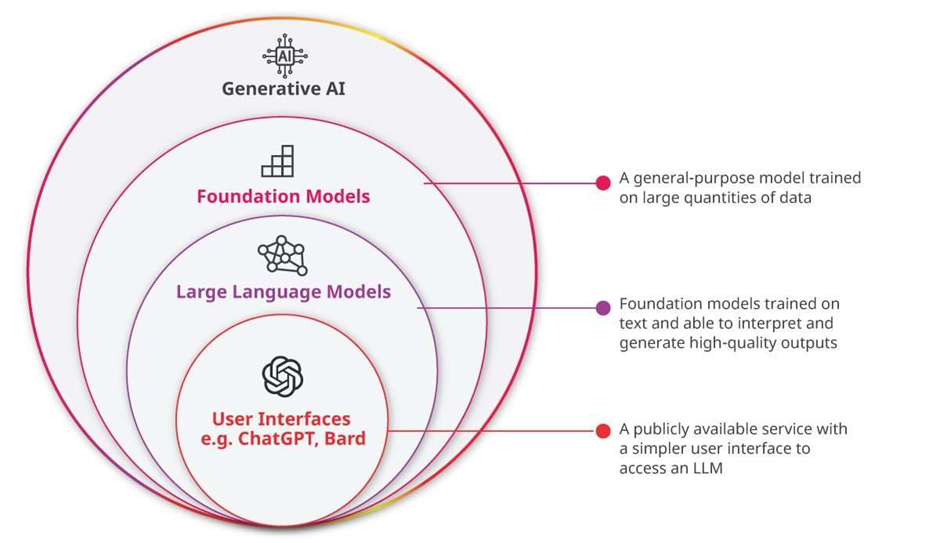

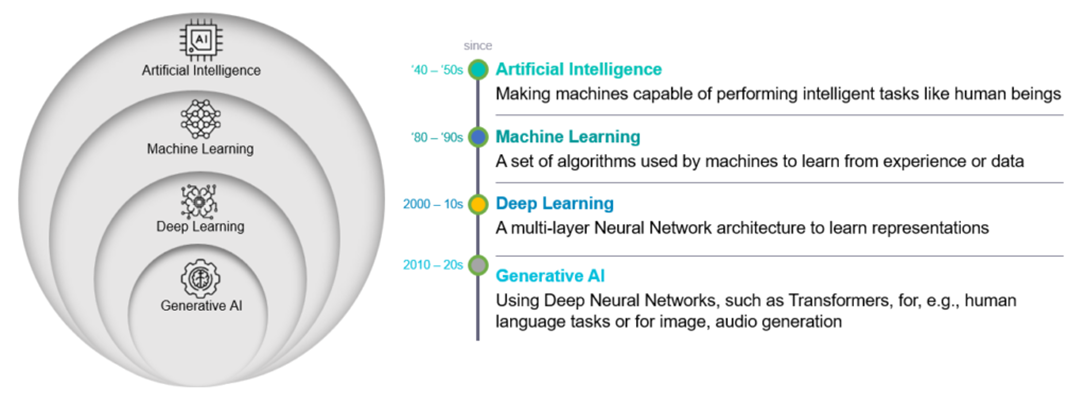

- AI models, including Gemini, are not inherently intelligent but rather the result of training on large datasets.

- Training can be compared to a child learning to crawl and learning not to fall off a bed; by this analogy, Gemini’s developers may not have trained the model adequately.

- This highlights the importance of thorough training and development processes to minimize errors and controversies in AI models.

Challenges of Perfectly Trained Models

- Even if AI models were perfectly trained, questions about leaders’ political affiliations would still have no black-and-white answers.

- Users can frame nuanced questions, for example asking under what circumstances a leader might be considered a dictator, a fascist, or a democrat.

- Even if the answers are not person-specific, users could still apply them to target particular leaders according to their own ideological and political biases.

- Political controversies are therefore likely to persist, mirroring the fractious nature of real-world politics.

The Complexity of Objective Facts

- Historian EH Carr compared facts to fish swimming in a vast and sometimes inaccessible ocean, highlighting the subjectivity involved in historical interpretation.

- What the historian catches depends partly on chance but mainly on which part of the ocean they choose to fish in and what tackle they use; in other words, on which facts they pursue and how they interpret them, shaped by their own biases.

- Ultimately, historians and users alike will tend to find the “facts” that align with their preconceived notions and objectives, reinforcing the subjective nature of historical understanding.

Subjectivity in Historical Interpretation

- EH Carr emphasized that historical facts are never objective, highlighting the historian’s role in selecting and arranging facts to influence public opinion.

- The historian determines which facts are presented and in what context, shaping the narrative according to their biases and objectives.

Biases in Analysis

- A person committed to a particular ideology may not offer impartial analysis, for example a communist assessing the regimes of the USSR, or a devout Catholic investigating the Holy Inquisition.

- When reading written works on topics like politics, history, gender, or race, people often consider the author’s background to identify potential biases.

- No such check is possible with generative AI models, since users cannot see the background of the training data that shapes each answer.

Challenges of Generative AI Models

- Users often expect 100% factual answers from generative AI models, overlooking the influence of training data on their responses.

- Governments should be cautious about overregulating historical and political content, recognizing its inherently subjective nature.

Alternative Approaches for AI Models

- Instead of attempting to give factual answers to subjective questions, generative AI models could decline such queries, much as they already decline requests involving expletives or abuse.

- Users should approach AI models as they would books or periodicals, recognizing the diversity of perspectives and the potential for biases in their responses.

Mains Question

Analyze the challenges in regulating generative AI models when they provide factual answers to subjective questions. (250 words)