Concerns over the Misuse of AI in Academia

Syllabus:

- GS 3: Artificial Intelligence

Why in the News?

Recently, a law student filed a petition in the Punjab and Haryana High Court against a private university that had failed him for allegedly using AI in an academic project and research. The case raises significant ethical questions about the use of generative AI in education.

Introduction

- The advent of generative AI (GenAI) has transformed learning, offering both opportunities and challenges.

- While GenAI enhances teaching and research, its misuse raises ethical concerns.

- Institutions need to take a balanced approach: ensure sound research, set clear guidelines on the use of AI, and update academic practice to keep pace with evolving technological realities.

AI Reshaping Academics

- Generative AI (GenAI) is reshaping education and research, but it has raised important questions about ethics and fairness.

Lawsuit reveals challenges

- A law student filed a petition in the Punjab and Haryana High Court after being failed for allegedly using AI in academic and project work.

- The student claimed that the institution had no evidence and had violated the principles of natural justice.

- Later, the university informed the High Court that the student had passed, and the petition was dismissed.

AI and Academics

Advantages of GenAI

- Advanced learning: GenAI tools can support learning by providing explanations, summaries, and examples, making complex concepts easier to understand.

- Time management: These tools can take on time-consuming tasks such as drafting reports, generating research ideas, or solving problems, allowing students and researchers to engage with their field at a deeper level.

- Creative support: GenAI can help with creative processes such as writing, planning, brainstorming, etc., providing new ideas and inspiration that users can build on.

- Language support: GenAI tools can help with language translation, grammar correction, and improving text clarity, helping non-native speakers or those struggling with writing skills.

- Personalized learning: These tools can address individual learning styles, providing customized content and instruction that enhances individual learning experiences, especially in self-paced learning environments.

- When used appropriately, GenAI can enhance learning and communication as a complementary tool.

Concerns regarding misuse

- Lack of originality and plagiarism: Students may use GenAI to produce material that they do not fully understand, resulting in plagiarism and a lack of genuine intellectual engagement.

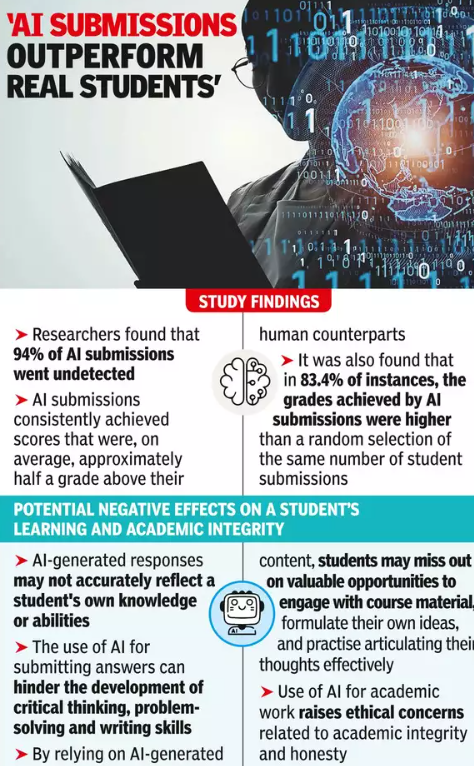

- Damage to critical thinking: Over-reliance on GenAI tools can hinder the development of critical thinking and problem-solving skills, as students may rely on AI instead of working through problems on their own.

- Inaccurate or biased information: GenAI may generate inaccurate or biased information, and if this is not recognized and checked, it can spread misinformation.

- Lack of authenticity in research: AI tools can be misused by researchers to rapidly generate research ideas or papers, compromising the authenticity and rigor of academic work.

- Inequality in access: If students do not have access to advanced AI tools, they can be disadvantaged, leading to inequality in academic achievement and opportunities.

- Uncontrolled use of GenAI undermines academic integrity and broader educational goals.

- Growing challenges: Academic journals and institutions now face difficulty in distinguishing genuine submissions from AI-generated ones.

Institutional responses on AI misuse

- Assessment methods: Many institutions in India continue to use outdated assessment methods, ignoring the impact of AI tools.

- Over-reliance on AI detectors: Some institutions rely heavily on tools like the Turnitin AI Detector, which can be unreliable because of false positives, especially when AI-generated text has subsequently been altered.

Issues of AI detection in education

- False positives: Research shows that many AI detection tools often yield false positives, with human-authored work incorrectly attributed to AI.

- Probability-based assessment: These tools rely on probabilities, and their accuracy drops when users revise AI-generated drafts.
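Why a probabilistic flag is weak evidence on its own can be seen with a little arithmetic. The sketch below applies Bayes' rule to a detector's output. All three figures (the share of AI-written submissions, the detection rate, and the false positive rate) are hypothetical values chosen only for illustration, not measurements of any real tool.

```python
def prob_ai_given_flag(base_rate, true_positive_rate, false_positive_rate):
    """Bayes' rule: probability a submission is AI-written, given that it was flagged."""
    flagged_ai = base_rate * true_positive_rate              # AI-written and flagged
    flagged_human = (1.0 - base_rate) * false_positive_rate  # human-written but flagged
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        raise ValueError("the detector never flags anything under these rates")
    return flagged_ai / total_flagged


# Hypothetical figures (assumptions, not data from any real detector):
# 10% of submissions are AI-written, 90% of those are caught,
# and 5% of human-written submissions are wrongly flagged.
posterior = prob_ai_given_flag(
    base_rate=0.10, true_positive_rate=0.90, false_positive_rate=0.05
)
print(f"{posterior:.2f}")  # prints 0.67
```

Under these assumed figures, about one in three flagged submissions would actually be human-written, which suggests why a detector report should be treated as a starting point for review and not as proof of misuse.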

Impact of human intervention

- Purely AI-generated text can be recognized fairly easily by machines, but reliability decreases once humans edit AI-generated text.

- Limited reliability: Over-reliance on machine-generated reports can lead to incorrect conclusions.

Opening up dialogue within institutions

- Clear guidelines are needed: As AI tools are increasingly integrated with word processing and other learning tools, it is important for organizations to define what constitutes permissible use of this technology.

- Preventing errors: Without clear guidelines, students and researchers may inadvertently misuse AI tools, resulting in unintended academic misconduct. Establishing clear boundaries can help avoid such issues.

- Inter-organization Dialogue: Educational institutions need to start talking about the role of AI in academic work.

- These discussions can lead to a blueprint of general guidelines for the use of AI, as well as guidelines tailored to specific subjects.

Supplementing written submissions with oral examination

- Comprehensive assessment: To better evaluate students and researchers, institutions may supplement written submissions with oral examinations. Oral testing can help assess students’ understanding and originality, reducing the risk of misuse of AI.

- Increased faculty workload: However, conducting oral examinations requires more time and effort from faculty and examiners. Institutions need to incorporate this extra work into their planning.

- Balancing quantity and quality of work: While incorporating oral examinations can be beneficial for better academic assessment, it is important for institutions to balance faculty workload so that teaching and research do not suffer.

Role of regulatory bodies

- Role of UGC and AICTE: Regulatory bodies like the University Grants Commission (UGC) and the All India Council for Technical Education (AICTE) have an important role to play in facilitating this transition.

- Clear policies and procedures should be provided for the use of AI tools in an academic environment.

- Promoting the ethical use of AI: Regulators should also encourage academic institutions to adopt ethical standards for the use of AI, ensuring that all students and researchers follow appropriate guidelines.

Disclosing the use of AI in academic work

- Mandatory disclosure: Students and researchers should be required to disclose any AI tools used in their academic work. This includes identifying the purpose of the tool and how it was used in the submission.

- Transparency and Accountability: Disclosure requirements ensure clarity on how AI is used in education.

- Facilitate fair decision-making: Based on this disclosure, educational institutions can make fair and balanced decisions about allegations of AI misuse.

- Review committees can assess whether the use of AI violated organizational guidelines or was an acceptable contribution.

- Using version history: Students and researchers should also keep a record of how their drafts developed.

- Tools such as version history in word processors (e.g. Microsoft Word) can help track changes made during the writing process.

- This record can serve as evidence of which parts were originally written by the author and which parts were modified with AI tools.

Conclusion

Integrating AI into education presents significant challenges. With clear, transparent guidelines and effective regulation, institutions can ensure responsible use by encouraging dialogue, adopting oral examinations, requiring disclosure, and relying on tools that track changes, and so navigate the world of AI smoothly.

Source: Indian Express

Mains Practice Question:

In the ongoing debate over the use of AI in education, discuss the shortcomings of current AI detection tools. How can institutions develop strategies that balance innovation and academic integrity?