AI and the environment: What are the pitfalls?

Relevance: GS Paper – 3, Robotics, Artificial Intelligence, Scientific Innovations & Discoveries, IT & Computers, GS Paper – 2, Government Policies & Interventions.

Recent Context:

The field of artificial intelligence has been booming recently. It has captured the public imagination with its ability to converse, write code, and compose poetry and essays in a surprisingly human way.

What is AI?

About AI:

- AI is the ability of a computer, or a robot controlled by a computer, to do tasks that are usually done by humans because they require human intelligence and discernment.

- Although there is no AI that can perform the wide variety of tasks an ordinary human can do, some AI can match humans in specific tasks.

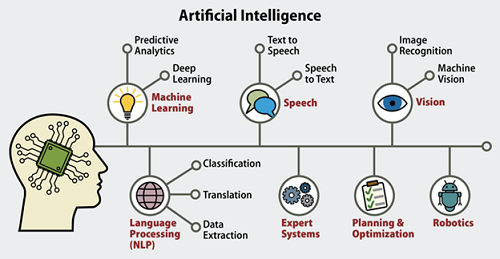

Characteristics & Components:

- The ideal characteristic of artificial intelligence is its ability to rationalize and take actions that have the best chance of achieving a specific goal. A subset of AI is Machine Learning (ML), in which computer programs automatically learn from and adapt to new data without being explicitly programmed for each task.

- Deep Learning (DL), a subset of ML, enables this automatic learning through the absorption of huge amounts of unstructured data such as text, images, or video.

How is Global AI Currently Governed?

India and AI:

- NITI Aayog has issued guiding documents on AI issues, such as the National Strategy for AI and the Responsible AI for All report.

- These emphasize social and economic inclusion, innovation, and trustworthiness.

United Kingdom:

- Outlined a light-touch approach, asking regulators in different sectors to apply existing regulations to AI.

- Published a white paper outlining five principles companies should follow: safety, security and robustness; transparency and explainability; fairness; accountability and governance; and contestability and redress.

US:

- The US released a Blueprint for an AI Bill of Rights (AIBoR), which outlines the harms of AI to economic and civil rights and lays down five principles for mitigating these harms.

- Instead of a horizontal approach like the EU's, the Blueprint endorses a sector-specific approach to AI governance, with policy interventions for individual sectors such as health, labor, and education, leaving it to sectoral federal agencies to come out with their own plans.

China:

- In 2022, China came out with some of the world’s first nationally binding regulations targeting specific types of algorithms and AI.

- It enacted a law to regulate recommendation algorithms with a focus on how they disseminate information.

What are the Differences Between AI, ML and DL?

- The term AI, coined in the 1950s, refers to the simulation of human intelligence by machines. AI, ML and DL are common terms and are sometimes used interchangeably. But there are distinctions.

- ML is a subset of AI that involves the development of algorithms that allow computers to learn from data without being explicitly programmed.

- ML algorithms can analyze data, identify patterns, and make predictions based on the patterns they find.

- DL is a subset of ML that uses multi-layered artificial neural networks, loosely inspired by the way the human brain works, to learn from data.
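
The idea of learning from data "without being explicitly programmed" can be shown with the simplest possible case: fitting a straight line to examples. In this hypothetical Python sketch, the rule y = 2x + 1 is never written into the program; the model recovers it from toy data using ordinary least squares and then makes a prediction. The function names and data are illustrative only; real ML systems use far larger models and datasets, but the principle of estimating parameters from data is the same.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) that minimise squared error (ordinary least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fit slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical toy training data that happens to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

model = fit_line(xs, ys)
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0
```

The program only contains a general procedure for finding a pattern; the specific pattern comes from the data it is given.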

What are the Different Categories of AI?

Artificial intelligence can be divided into two different categories:

- Weak AI/Narrow AI: It is a type of AI that is limited to a specific or narrow area. Weak AI simulates human cognition. It has the potential to benefit society by automating time-consuming tasks and by analyzing data in ways that humans sometimes can’t. Examples include chess-playing programs and personal assistants such as Amazon’s Alexa and Apple’s Siri.

- Strong AI: These are systems that would carry out tasks considered to be human-like. They tend to be more complex and complicated, and are designed to handle situations in which they may be required to solve problems without a person intervening. Applications such as self-driving cars are often cited as steps in this direction.

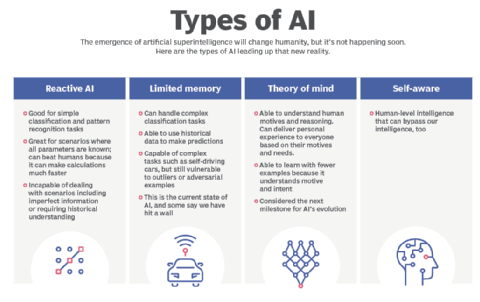

What are the Different Types of AI?

- Reactive AI: It uses algorithms to optimize outputs based on a set of inputs. Chess-playing AIs, for example, are reactive systems that optimize for the best strategy to win the game. Reactive AI tends to be fairly static, unable to learn or adapt to novel situations. Thus, it will produce the same output given identical inputs.

- Limited Memory AI: It can adapt to past experiences or update itself based on new observations or data. Often, the amount of updating is limited, and the length of memory is relatively short. Autonomous vehicles, for example, can read the road and adapt to novel situations, even learning from past experience.

- Theory-of-mind AI: These systems are fully adaptive and have an extensive ability to learn and retain past experiences. They include advanced chatbots that could pass the Turing test, fooling a person into believing the AI was a human being. The Turing test is a method of inquiry in AI for determining whether or not a computer is capable of thinking like a human being.

- Self-aware AI: As the name suggests, such systems would become sentient and aware of their own existence. This is still in the realm of science fiction, and some experts believe that an AI will never become conscious or alive.
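
The contrast between reactive and limited-memory AI can be made concrete with a toy sketch in Python, assuming a highly simplified driving scenario. The function and class names, the 10-metre braking threshold, and the three-reading memory window are all hypothetical choices for illustration; real autonomous vehicles work very differently.

```python
from collections import deque


def reactive_policy(distance_m: float) -> str:
    """Reactive AI (toy): the output depends only on the current input,
    so identical inputs always give identical outputs and nothing is learned."""
    return "brake" if distance_m < 10.0 else "cruise"


class LimitedMemoryPolicy:
    """Limited memory AI (toy): keeps a short, bounded window of recent
    observations and uses it to adapt its behaviour."""

    def __init__(self, window: int = 3) -> None:
        # A deque with maxlen discards the oldest reading automatically,
        # so the memory stays short.
        self.history = deque(maxlen=window)

    def act(self, distance_m: float) -> str:
        self.history.append(distance_m)
        if len(self.history) >= 2:
            # Average distance closed per step over the remembered window.
            closing = (self.history[0] - self.history[-1]) / (len(self.history) - 1)
        else:
            closing = 0.0
        # Look two steps ahead: brake earlier if the obstacle is approaching fast.
        return "brake" if distance_m - 2 * closing < 10.0 else "cruise"


car = LimitedMemoryPolicy()
print(reactive_policy(20.0))  # cruise: 20 m is above the fixed threshold
print(car.act(30.0))          # cruise: no history yet
print(car.act(20.0))          # brake: same 20 m reading, but the gap shrank by 10 m
```

Given the same 20 m reading, the reactive function always answers "cruise", while the limited-memory policy brakes because it remembers how quickly the gap has been closing.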

Upcoming challenges in the development of AI:

- Investment in artificial intelligence is growing rapidly. The global AI market is currently valued at $142.3 billion (€129.6 billion), and is expected to grow to nearly $2 trillion by 2030.

- AI systems are already a big part of our lives, helping governments, industries and regular people be more efficient and make data-driven decisions.

- But there are some significant downsides to this technology. Environmental sustainability is one of the major challenges in the development and use of AI.
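
The market figures above imply a very steep growth rate. A quick compound annual growth rate (CAGR) calculation in Python makes this explicit. It assumes that "currently" refers to 2023 and treats "nearly $2 trillion" as $2,000 billion; both are assumptions, not figures stated in the source.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate r for which
    start_value * (1 + r) ** years == end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of US dollars, from the text. Assumptions: the
# "current" figure refers to 2023, and "nearly $2 trillion" is taken as 2,000.
market_2023 = 142.3
market_2030 = 2000.0

rate = cagr(market_2023, market_2030, years=2030 - 2023)
print(f"Implied growth: {rate:.1%} per year")  # roughly 46% a year
```

At roughly 46% a year, the market would more than double every two years (1.46 squared is about 2.1).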

AI has a big carbon footprint:

- To carry out the tasks they are designed for, AI models need to process vast amounts of data. To learn to recognize an image of a car, for example, an algorithm needs to churn through millions of pictures of cars. In the case of ChatGPT, it is fed colossal text databases from the internet to learn to handle human language. This data crunching happens in data centers, requires a lot of computing power, and is energy-intensive.

- “The entire data center infrastructure and data transmission networks account for 2-4% of global CO2 emissions. This is not only AI, but AI is a large part of that.” That is on a par with the aviation industry's emissions. It is important to note, however, that the widely cited University of Massachusetts study's estimate of AI's carbon footprint was for an especially energy-intensive AI model.

- Smaller models can run on a laptop and use less energy. But those that use deep learning, such as algorithms that curate social media content, or ChatGPT, need a significant amount of computing power.
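
The gap between small and large models can be illustrated with a widely used rule of thumb for dense transformer language models (the architecture behind tools like ChatGPT): training compute is roughly 6 × parameters × training tokens floating-point operations (FLOPs). The Python sketch below applies it to two hypothetical model sizes. The sizes are illustrative only, and the rule ignores hardware efficiency, so it indicates scale rather than actual energy use.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for a dense transformer language model.

    Rule of thumb: about 6 floating-point operations per parameter per
    training token (forward plus backward pass). It is an estimate only.
    """
    return 6 * params * tokens


# Hypothetical model sizes, for illustration only.
small = training_flops(params=100e6, tokens=2e9)   # 100 million parameters, 2 billion tokens
large = training_flops(params=100e9, tokens=2e12)  # 100 billion parameters, 2 trillion tokens

print(f"small model: {small:.1e} FLOPs")
print(f"large model: {large:.1e} FLOPs")
print(f"ratio: {large / small:,.0f}x")  # 1,000x more parameters and 1,000x more data
```

Scaling both the model and its dataset up by a factor of 1,000 multiplies the compute by about a million, and on the same hardware energy use scales roughly with compute. This is why scaling models and datasets down is one of the levers for reducing AI's footprint.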

So what can be done to tackle AI’s footprint?

- Environmental concerns need to be taken into account right from the start in the algorithm design and training phases.

- There is a need to consider the entire production chain and all the environmental problems connected to it, most notably energy consumption and emissions, but also material toxicity and electronic waste.

- Rather than building bigger and bigger AI models, as is the current trend, companies could scale them down, use smaller data sets and ensure the AI is trained on the most efficient hardware available.

- Using data centers in regions that rely on renewable energy and don’t require huge amounts of water for cooling could also make a difference. For example, huge facilities in parts of the US or Australia, where fossil fuels make up a significant chunk of the energy mix, will produce more emissions than similar facilities in Iceland, where geothermal power is a main source of energy and lower temperatures make cooling servers easier.

- Energy isn’t the only consideration. The huge amount of water data centers need to prevent their facilities from overheating has raised concerns in some water-stressed regions, such as Santiago, Chile.
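
The effect of location can be expressed as a simple product: emissions ≈ IT energy × PUE × grid carbon intensity, where PUE (power usage effectiveness) is the ratio of a facility's total energy use to the energy used by its servers, and rises with cooling overhead. The Python sketch below compares one job in two settings. All input values are hypothetical placeholders, not measurements for the US, Australia, Iceland or any other real location, and water use is not modelled.

```python
def job_emissions_kg(it_energy_kwh: float, pue: float, grid_kg_per_kwh: float) -> float:
    """Estimate the CO2 emitted by a computing job in a data center.

    it_energy_kwh:   electricity drawn by the servers themselves
    pue:             power usage effectiveness = total facility energy / IT energy;
                     always >= 1, and higher when more energy goes on cooling
    grid_kg_per_kwh: carbon intensity of the local electricity supply
    """
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    return it_energy_kwh * pue * grid_kg_per_kwh


# Hypothetical placeholder inputs for the same job in two settings;
# these are NOT measured values for any real country or data center.
job_kwh = 100_000
fossil_heavy = job_emissions_kg(job_kwh, pue=1.5, grid_kg_per_kwh=0.7)
renewable_cool = job_emissions_kg(job_kwh, pue=1.1, grid_kg_per_kwh=0.03)

print(f"fossil-heavy grid, warm climate: {fossil_heavy / 1000:.1f} t CO2")
print(f"renewable grid, cool climate:    {renewable_cool / 1000:.1f} t CO2")
```

Because the terms multiply, a cleaner grid and a lower cooling overhead compound each other, which is why siting matters as much as model efficiency.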

Emissions aside, is there a need for efficient use of AI for environmental protection?

- Even if big tech companies shrink AI’s energy use, there’s another issue that is potentially more damaging to the environment.

- There should be more focus on the way AI is being used to speed up activities that contribute to climate change.

- For example, AI is used for advertising, and such tools are deliberately “designed to increase consumption”, which assuredly comes with a very significant climate cost.

- AI has also been used to help companies extract fossil fuels; Google has said it will no longer build customized AI tools for this purpose.

Conclusion:

- The role of artificial intelligence is only likely to become more significant in the future. And keeping up with such rapidly advancing technology will be a challenge.

- Therefore, regulation is crucial to ensuring AI development is sustainable and doesn’t make emissions targets harder to reach.

- Governments should also raise awareness about how to deal with AI, so as to encourage innovation in the field and reap the benefits this new technology brings, while avoiding the potential dangers and protecting citizens.

Source: https://indianexpress.com/article/explained/explained-climate/ai-environment-pitfalls-8881080/